TL;DR: Let’s see how to build an AI-powered chat app using the WPF AI AssistView control and OpenAI’s GPT model. This blog covers project setup, OpenAI API integration, and ViewModel creation for managing conversations. You’ll learn to handle AI-generated responses, display typing indicators, and customize UI templates. Enhance your WPF app with an intuitive and dynamic chat experience!

Conversational AI is transforming user experiences across industries. This blog explores building an AI chat experience using Syncfusion’s innovative WPF AI AssistView control and OpenAI’s GPT model.

The WPF AI AssistView control simplifies developing AI-driven chat interfaces, handling user input, and switching templates efficiently. Integrating OpenAI’s GPT enhances these interfaces by generating human-like responses, creating a dynamic conversational AI.

Let’s get started!

Key features of WPF AI AssistView control

The key features of the WPF AI AssistView are as follows:

- Suggestions: Offers selectable response suggestions to expedite the flow of conversation.

- Typing indicator: Displays a loading indicator to represent asynchronous communication with AI services.

- Formatted responses: Visualizes responses using customizable templates.

- Styling: Allows customizing the appearance of both request and response items.

- UI virtualization: Optimizes performance for handling large conversations.

Building an AI-powered smart chat app using WPF AI AssistView

Let’s walk through the process of building an AI-powered chat app using the WPF AI AssistView and OpenAI GPT.

Step 1: Set up the project

To begin, create a new WPF project. Install the Microsoft.SemanticKernel NuGet package for the OpenAI integration, and add the Syncfusion package that contains the WPF AI AssistView control (Syncfusion.SfChat.WPF) to the project.

Step 2: Import the necessary dependencies

Add the following using directives at the top of the code files.

using System.Collections.ObjectModel;
using System.ComponentModel;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Syncfusion.UI.Xaml.Chat;

Step 3: Create the OpenAI API key

We need an API key to interact with the GPT model. The sample code in this post uses Semantic Kernel’s Azure OpenAI connector, so the key, the model (deployment) name, and the endpoint all come from an Azure OpenAI resource. In the service class, create:

- OPENAI_KEY: A string variable where we should add our valid OpenAI API key.

- OPENAI_MODEL: A string variable representing the OpenAI language model we want to use.

- API_ENDPOINT: A string variable representing the URL endpoint of the OpenAI API.
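Hard-coding the key is fine for a quick experiment, but the value can also be read at startup so that it stays out of source control. The following is a minimal sketch of that idea. It assumes a hypothetical environment variable named OPENAI_API_KEY and a hypothetical helper class ApiKeyProvider; neither is part of the original sample.

```csharp
using System;

public static class ApiKeyProvider
{
    // Hypothetical variable name; use whatever name your environment defines.
    public const string VariableName = "OPENAI_API_KEY";

    // Returns the key from the environment, or throws with a clear message
    // so that a missing key is caught at startup rather than on the first request.
    public static string GetKey()
    {
        var key = Environment.GetEnvironmentVariable(VariableName);
        if (string.IsNullOrWhiteSpace(key))
        {
            throw new InvalidOperationException(
                $"Set the {VariableName} environment variable before starting the app.");
        }
        return key;
    }
}
```

With a helper like this, the OPENAI_KEY field could be initialized with ApiKeyProvider.GetKey() instead of a string literal.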

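public class AIAssistChatService
{
    // Add a valid API key here. Avoid committing a real key to source control.
    private string OPENAI_KEY = "";
    // Model (deployment) name to use.
    private string OPENAI_MODEL = "gpt-4o-mini";
    // Azure OpenAI endpoint, typically https://<resource-name>.openai.azure.com.
    private string API_ENDPOINT = "https://openai.azure.com";
}

Step 4: Define the AIAssistChatService class and configure the OpenAI API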

The following code example completes the AIAssistChatService class. Each part is explained here.

We declare a private field gpt of type IChatCompletionService, which stores the chat completion service once it is initialized, along with the Kernel instance it is resolved from. The service sends messages to the GPT model and receives its responses.

The Initialize method builds the kernel with the Azure OpenAI chat completion connector and resolves the IChatCompletionService from it.

The ResponseChat method handles sending a message to the GPT model and receiving its response.

- It takes a string line (the user’s input) as a parameter.

- The Response property is initially set to an empty string.

- The ResponseChat method then calls the gpt.GetChatMessageContentAsync(line) method to send the input message to the GPT model and awaits the response.

- Finally, the response is converted to a string and assigned to the Response property.
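As described, ResponseChat sends each line on its own, so the model has no memory of earlier turns. If follow-up questions should keep their context, one option is to pass a Semantic Kernel ChatHistory instead of a bare string. The following is a sketch under that assumption; the ConversationService class and the system prompt text are hypothetical and not part of the original sample.

```csharp
using System.Threading.Tasks;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

public class ConversationService
{
    private readonly IChatCompletionService chat;

    // The history accumulates every user and assistant turn.
    // The system prompt text here is a hypothetical example.
    private readonly ChatHistory history =
        new ChatHistory("You are a helpful assistant.");

    public ConversationService(IChatCompletionService chat)
    {
        this.chat = chat;
    }

    public async Task<string> AskAsync(string line)
    {
        history.AddUserMessage(line);

        // The whole history is sent, so the model sees the earlier turns.
        ChatMessageContent reply = await chat.GetChatMessageContentAsync(history);

        string text = reply.Content ?? string.Empty;
        history.AddAssistantMessage(text);
        return text;
    }
}
```

The trade-off is that every request grows with the conversation, so long chats may need the history trimmed or summarized.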

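public class AIAssistChatService
{
    // The OPENAI_KEY, OPENAI_MODEL, and API_ENDPOINT fields from Step 3 also belong in this class.
    IChatCompletionService gpt;
    Kernel kernel;

    // Holds the text of the most recent reply.
    public string Response { get; set; }

    // Builds the kernel and resolves the chat completion service.
    public Task Initialize()
    {
        var builder = Kernel.CreateBuilder().AddAzureOpenAIChatCompletion(
            OPENAI_MODEL, API_ENDPOINT, OPENAI_KEY);
        kernel = builder.Build();
        gpt = kernel.GetRequiredService<IChatCompletionService>();

        // Nothing above is awaited, so a completed task is returned.
        return Task.CompletedTask;
    }

    // Sends the user's input to the model and stores the reply in Response.
    public async Task ResponseChat(string line)
    {
        Response = string.Empty;
        var response = await gpt.GetChatMessageContentAsync(line);
        Response = response.ToString();
    }
}

Step 5: Create a ViewModel class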

Now, create a ViewModel class with the necessary properties.

internal class AssistViewModel : INotifyPropertyChanged
{
    AIAssistChatService service;
    private Author currentUser;
    private ObservableCollection<object> chats;
    private ObservableCollection<string> suggestion;
    private bool showTypingIndicator;

    // Each setter raises PropertyChanged so that the bound control refreshes.
    public Author CurrentUser
    {
        get { return currentUser; }
        set { currentUser = value; RaisePropertyChanged(nameof(CurrentUser)); }
    }

    public bool ShowTypingIndicator
    {
        get { return showTypingIndicator; }
        set { showTypingIndicator = value; RaisePropertyChanged(nameof(ShowTypingIndicator)); }
    }

    public ObservableCollection<string> Suggestion
    {
        get { return suggestion; }
        set { suggestion = value; RaisePropertyChanged(nameof(Suggestion)); }
    }

    public ObservableCollection<object> Chats
    {
        get { return chats; }
        set { chats = value; RaisePropertyChanged(nameof(Chats)); }
    }

    public event PropertyChangedEventHandler PropertyChanged;

    public void RaisePropertyChanged(string propName)
    {
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propName));
    }

    public AssistViewModel()
    {
        this.Chats = new ObservableCollection<object>();
        this.CurrentUser = new Author() { Name = "User" };
        service = new AIAssistChatService();
        service.Initialize();

        // Greeting shown when the chat opens.
        Chats.Add(new TextMessage
        {
            Author = new Author { Name = "Bot" },
            DateTime = DateTime.Now,
            Text = "I am an AI assistant.\n" +
                   "Ask anything you want to know",
        });
    }
}

Step 6: Hook the chat collection change event

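Subscribe to the CollectionChanged event of the Chats collection in the ViewModel constructor. When the user adds a message, the handler requests a response from the service and adds it to the collection, so the AI AssistView control displays it.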
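One detail to watch in the handler shown in this step: if the service call throws (for example, on a network failure), the line that hides the typing indicator never runs. A try/finally block is a common guard against that. The sketch below isolates the pattern; TypingIndicatorGuard and the getReply delegate are hypothetical stand-ins for the ViewModel and the service.ResponseChat call.

```csharp
using System;
using System.Threading.Tasks;

public sealed class TypingIndicatorGuard
{
    public bool ShowTypingIndicator { get; private set; }

    // getReply is a hypothetical stand-in for the call to the chat service.
    public async Task<string> AskAsync(Func<Task<string>> getReply)
    {
        ShowTypingIndicator = true;
        try
        {
            return await getReply();
        }
        catch (Exception ex)
        {
            // Surface the failure as a chat message instead of letting it escape.
            return "Sorry, the request failed: " + ex.Message;
        }
        finally
        {
            // Runs on success and on failure, so the indicator is always hidden.
            ShowTypingIndicator = false;
        }
    }
}
```

In the handler, the same shape would wrap the await service.ResponseChat(item.Text) call. Because the handler is an async void method, catching the exception there also keeps it from propagating as an unhandled error.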

public AssistViewModel()
{
    // The initialization from Step 5 goes here, followed by the subscription.
    this.Chats.CollectionChanged += Chats_CollectionChanged;
}

private async void Chats_CollectionChanged(object sender, System.Collections.Specialized.NotifyCollectionChangedEventArgs e)
{
    // React only to messages added by the current user; the bot's own replies are ignored.
    var item = e.NewItems?[0] as ITextMessage;
    if (item != null && item.Author.Name == CurrentUser.Name)
    {
        ShowTypingIndicator = true;
        await service.ResponseChat(item.Text);
        Chats.Add(new TextMessage
        {
            // AIIcon is a template for the bot's avatar; its definition is not shown in this post.
            Author = new Author { Name = "Bot", ContentTemplate = AIIcon },
            DateTime = DateTime.Now,
            Text = service.Response
        });
        ShowTypingIndicator = false;
    }
}

Step 7: Create the AI AssistView UI

Let’s define the necessary XML namespaces and initialize the WPF AI AssistView control.
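The namespace declarations themselves are not shown in the control markup for this step, so here is a minimal sketch of the surrounding window. The syncfusion prefix maps to Syncfusion's WPF XAML schema; the ChatApp namespace, the MainWindow class name, and the window size are assumptions made for illustration.

```xml
<Window x:Class="ChatApp.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:syncfusion="http://schemas.syncfusion.com/wpf"
        Title="AI Assist" Height="600" Width="450">
    <Grid>
        <!-- The SfAIAssistView markup from this step goes here. -->
    </Grid>
</Window>
```

For the bindings to resolve, the window's DataContext also needs to be set to an AssistViewModel instance, for example in the window's constructor.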

<syncfusion:SfAIAssistView

x:Name="chat"

CurrentUser="{Binding CurrentUser}"

Messages="{Binding Chats}"

ShowTypingIndicator="{Binding ShowTypingIndicator}">

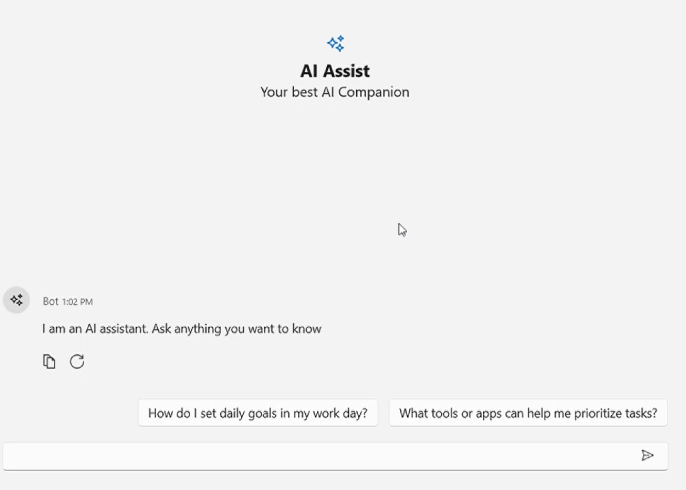

</syncfusion:SfAIAssistView>Step 8: Define a banner template

Finally, define a banner template as shown in the following code example.

<syncfusion:SfAIAssistView
    x:Name="chat"
    CurrentUser="{Binding CurrentUser}"
    Messages="{Binding Chats}"
    ShowTypingIndicator="{Binding ShowTypingIndicator}">
    <syncfusion:SfAIAssistView.BannerTemplate>
        <DataTemplate>
            <StackPanel
                Orientation="Vertical"
                VerticalAlignment="Bottom"
                Margin="0,10,0,0">
                <TextBlock Text="AI Assist" FontSize="20" HorizontalAlignment="Center" FontWeight="Bold"/>
                <TextBlock Text="Your best AI Companion" HorizontalAlignment="Center" FontSize="16"/>
            </StackPanel>
        </DataTemplate>
    </syncfusion:SfAIAssistView.BannerTemplate>
</syncfusion:SfAIAssistView>

Refer to the following output image.

Conclusion

Thanks for reading! By combining OpenAI’s GPT models with the Syncfusion WPF AI AssistView control, we can create sophisticated AI chat interfaces that offer natural and intuitive user experiences. The WPF AI AssistView control simplifies key tasks such as message handling, template switching, and input management, making it well suited to building conversational apps. Whether you’re developing customer support bots, personal assistants, or interactive learning tools, this setup offers a strong foundation for building advanced AI chat systems.

Our customers can access the latest version of Essential Studio® from the License and Downloads page. If you are not a Syncfusion customer, you can download our free trial to explore all our controls.

For questions, you can contact us through our support forum, support portal, or feedback portal. We are always happy to assist you!